How Does AI Awareness Alter Our Human Self-Perception?

Good morning!

First off, my apologies for the recent gap in updates; things have been very busy.

Today's topics include:

How is the emergence of AI shaping our understanding of ourselves?

Considering LLMs as new subjects in psychological studies, how do we assess their cognition?

Our perceptions of AI messages and images.

The increasing use of AI in job interviews: its impact on applicant behaviors and impression management.

Finally, we'll look at two discussions of AI's role in scientific research.

RESEARCH RECAP

With the advent of generative AI and increasingly capable AI agents, characteristics once considered uniquely human, such as language, logic, and rationality, are increasingly displayed by artificial agents. If we treat these agents as a distinct type of entity, how will that change our self-perception, and how will we regard entities endowed with intelligence?

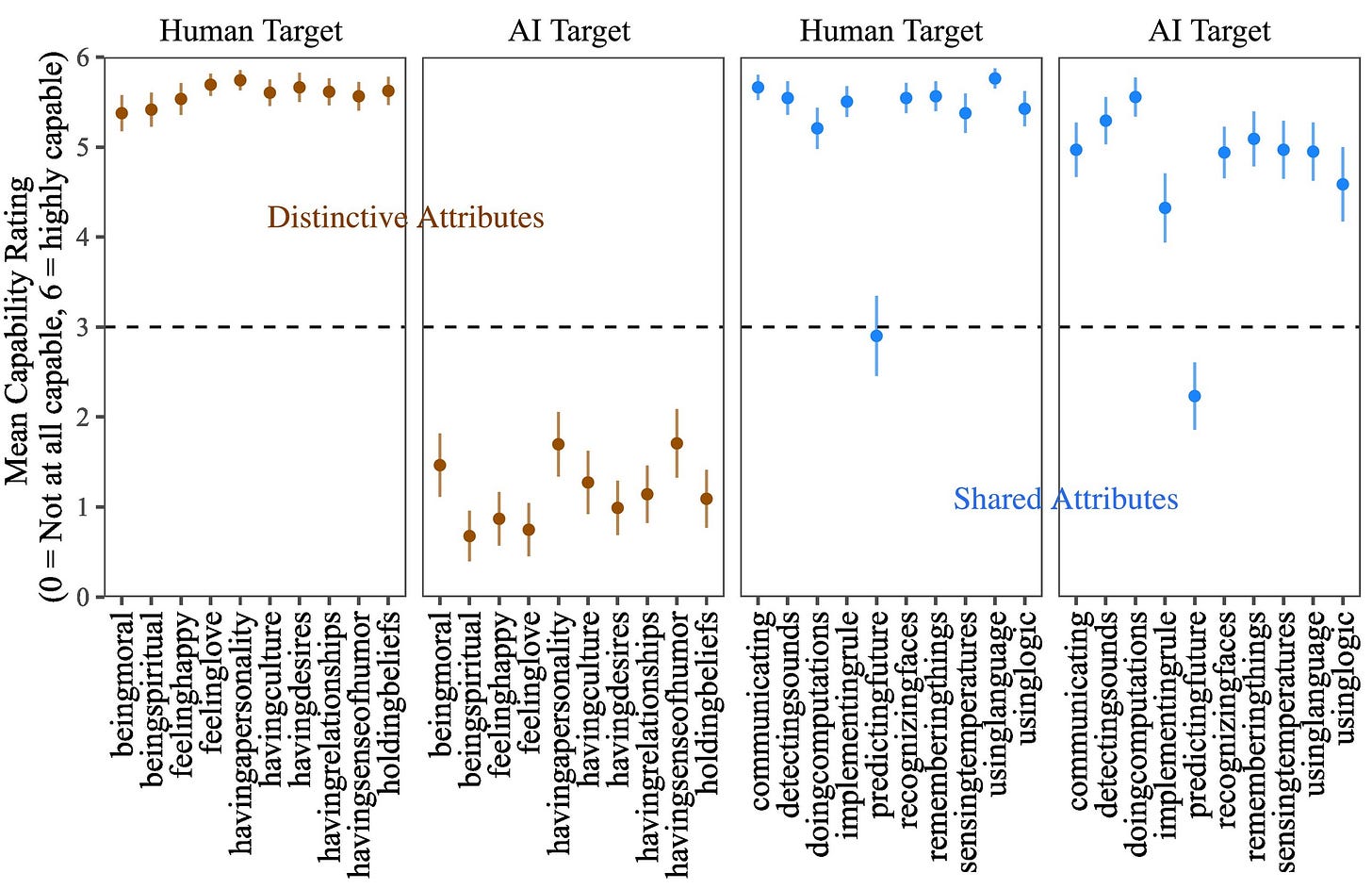

This research [1] examines how advances in AI reshape our perceptions of human nature. It sorts human attributes into two groups: those shared with AI and those distinctive to humans.

The paper reports a series of five studies examining how people perceive human attributes in light of AI advances.

Key Findings:

Study 1: Identified two types of human attributes: distinctive (unique to humans) and shared (common with AI).

Study 2: Reading about AI advances made distinctive human attributes seem more essential to human nature.

Study 3: Confirmed the AI Effect: distinctive attributes were rated higher after AI exposure, and this was not due to anthropomorphism.

Study 4: The AI Effect persisted even when controlling for demand bias; distinctive traits were still seen as more essential to being human.

Study 5: Simple AI mentions, implying human obsolescence, were enough to elevate the importance of distinctive traits.

The study reveals the AI Effect, where exposure to AI leads people to place greater importance on human attributes distinct from AI. For more information, please see the full article.

NEWS FLASHES

PSYCHOLOGY → AI

Psychology Research Based on Anthropomorphized AI Models

Best Practices in LLM Cognitive Assessment

This paper [2] discusses how to evaluate cognitive abilities in large language models (LLMs). Using case studies, it identifies common pitfalls and effective strategies, focusing on prompt sensitivity and cultural diversity to improve AI Psychology assessments.

Cognitive Dissonance: LMs and Truth Assessment

This research [3] contrasts two ways of assessing truthfulness in language models (LMs): querying, which relies on the model's outputs, and probing, which reads its internal representations. It identifies types of disagreement between the two and shows that probing generally gives more accurate results, offering insight into how LMs represent truth.

Virtual Agents: Improving Emotional Perception

This study [4] presents a new approach to improving emotion perception in virtual agents. It emphasizes generating consistent, expressive multimodal behaviors, and its findings suggest that emotional consistency across modalities significantly enhances the agents' communicative effectiveness.

AI → PSYCHOLOGY

Psychological Studies Arising from Human Interactions with AI

Source Disclosure's Effect on AI Message Evaluation

These studies [5] show that disclosing an AI source affects how people evaluate health messages, with a slight bias against AI. The effect varies with individuals' attitudes toward AI but does not significantly change message preference.

Impact of Zoonotic AI Design on Consumer Adoption

This research [6] shows that consumers are less likely to adopt AI services with animal-like designs than those with robotic ones. The reluctance is linked to the cognitive difficulty of associating animal traits with tasks, but matching animal characteristics to suitable tasks can reverse the effect.

AI Interfaces' Influence on Interviewees' Impression Management

This study [7] explores how AI in video interviews affects candidates' behavior. Different AI interfaces affect both honest and deceptive impression management, and can also reduce interview anxiety.

TOOLS TREASURE

AI's Role in Uncovering Research Blind Spots

This article explores AI's growing ability to formulate scientific hypotheses, potentially revealing overlooked areas in research. It emphasizes AI's evolving sophistication in scientific inquiry. Discover more [Nature].

AI Science Search Engines: Quantity and Quality

The article addresses the surge in AI-driven science search engines, questioning how effectively they aid research and summarize scientific findings. It explores the promises and challenges of these AI tools. [Nature]

[1] The AI Effect: People rate distinctively human attributes as more essential to being human after learning about artificial intelligence advances. (2023, July). Academic Press. doi: 10.1016/j.jesp.2023.104464

[2] Ivanova, A. A. (2023). Running cognitive evaluations on large language models: The do's and the don'ts. arXiv, 2312.01276. Retrieved from https://arxiv.org/abs/2312.01276v1

[3] Liu, K., Casper, S., Hadfield-Menell, D., & Andreas, J. (2023). Cognitive Dissonance: Why Do Language Model Outputs Disagree with Internal Representations of Truthfulness? arXiv, 2312.03729. Retrieved from https://arxiv.org/abs/2312.03729v1

[4] Chang, C.-J., Sohn, S. S., Zhang, S., Jayashankar, R., Usman, M., & Kapadia, M. (2023). The Importance of Multimodal Emotion Conditioning and Affect Consistency for Embodied Conversational Agents. arXiv, 2309.15311. Retrieved from https://arxiv.org/abs/2309.15311v2

[5] Lim, S., & Schmälzle, R. (2023). The effect of source disclosure on evaluation of AI-generated messages: A two-part study. arXiv, 2311.15544. Retrieved from https://arxiv.org/abs/2311.15544v2

[6] Poirier, S.-M., Huang, B., Suri, A., & Sénécal, S. (2023). Beyond humans: Consumer reluctance to adopt zoonotic artificial intelligence. Psychology & Marketing. doi: 10.1002/mar.21934

[7] Suen, H.-Y., & Hung, K.-E. (2024). Revealing the influence of AI and its interfaces on job candidates' honest and deceptive impression management in asynchronous video interviews. Technological Forecasting and Social Change, 198, 123011. doi: 10.1016/j.techfore.2023.123011